DeepSeek-R1 Initial Notes

While I've been traveling, little-known Chinese research lab DeepSeek released an open-source model that can compete with the best closed-source products OpenAI and Anthropic have to offer. Everyone appears to be freaking out about it.

There are three reasons for this: its astonishingly low training cost relative to its performance (it reportedly cost $5 million to train), the fact that it's open-source, and the fact that even if you choose to pay for its API, it's about 90% cheaper than its American competitors. The model is also available to try for free immediately. This is all possible because of how much cheaper the model is to run than other frontier models. Figuring out a way to make it so cheap was necessary for DeepSeek, in large part because of the lack of GPUs available to Chinese companies. The compute and training optimizations used to make it so cheaply are above my pay grade, but I'm told they're pretty nuts.

Sam Altman quickly responded by announcing that o3 would be made available on ChatGPT's free tier. Love to see money go out of that man's pocket, no matter how it happens, and this one had to be a tough blow. o3 was released two weeks ago and he's already being undercut by a random quant firm from China.

This is all just deserts. OpenAI went from a nonprofit focused on AI research to one of the biggest unicorns in recent memory by completely betraying its initial mission statement:

> OpenAI is a non-profit artificial intelligence research company. Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. Since our research is free from financial obligations, we can better focus on a positive human impact.

Under Sam Altman's leadership, it pivoted from a nonprofit to a for-profit organization, after an attempted coup over Altman's accelerationist mindset failed and resulted in the departure of most of the people in the organization who were, you know, concerned about the effect releasing this technology into the world would have. The ship of adequately safety-testing LLMs before releasing them into the wild has already sailed - Gemini is still hallucinating plenty of its search results - but it's funny to see a Chinese company end up carrying the torch for the other part of OpenAI's mission: open-sourcing cutting-edge research instead of gating it and charging a premium.

DeepSeek is the stupid prize America and OpenAI won for playing stupid games. Throttle the NVIDIA imports to China and people will come up with a more energy-efficient way to train models. Create a moat around your models and the open-source world will leave you in the dust. The broader political implications of this remain to be seen, but given the far-right turn of American tech firms over the past year, I think it's a net good for other countries to find ways to compete with us. And the open-source possibilities of AI are the only thing that gives me some degree of hope about its long-term future: maybe it'll eat all the jobs, but at least Sam Altman and his ilk won't get to run the monopoly doing it?

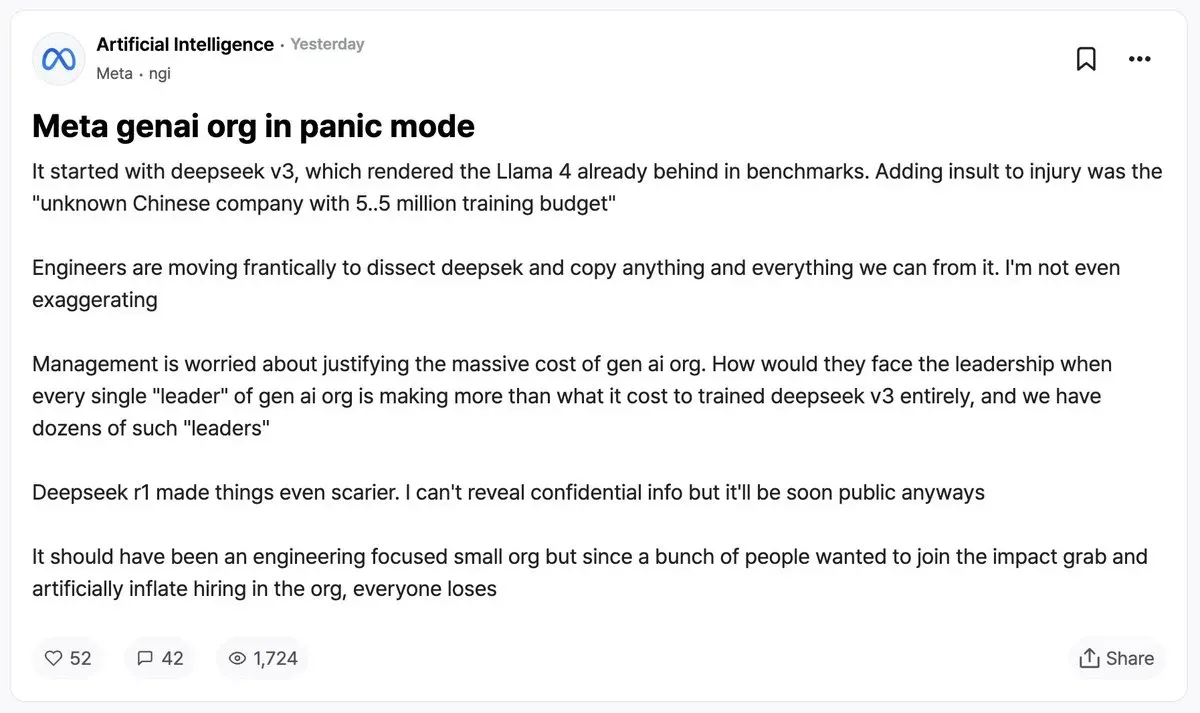

It's nice to see OpenAI eat some crow, but even in the open-source AI world, DeepSeek has started fires everywhere. Facebook employees on Blind are reporting that they're being asked to justify the massive cost of their GenAI research arm when DeepSeek could do it at a fraction of the cost and with a fraction of the staff. I am not, writ large, a fan of Facebook, but they have been leading the charge on open-source AI with Llama. I hope this doesn't push them too far in another direction, but if I know Mark Zuckerberg, he's always looking for a reason to lay people off.

I haven't actually spent any time with the model yet (spending time in the world with loved ones while out of New York has been a higher priority), so we'll see if this is the model that finally gets me to invest more time in an LLM-aided workflow. But regardless, some general takeaways:

- Anything making OpenAI this uncomfortable is probably doing something right.

- It's likely that some of DeepSeek's claims are questionable, or their funding sources less-than-ideal. Nobody's producing a model like this in an "ethical" way. I am well aware their model is censored for the benefit of the Chinese government. In one way or another, every model out there does censorship, and I don't think we're far off from GPT being anti-woke given Altman's cozying up to Trump.

- The promise this opens up for the possibilities of running frontier LLMs locally could mean a lot when it comes to security, further open-source development, and all the things I'd like to see more of in any new technology: preventing it from turning into either a walled garden or a panopticon.

Update:

I had not seen this take on it at the time of writing - generally, I think it's probably for the best that I write without scouring the internet for something I didn't think of, deciding I'm an idiot, and not writing anything - but it's an angle that seems worth noting: plenty of folks are seeing the apoplectic reaction to DeepSeek as another form of fearmongering designed to get more dollars into OpenAI et al.'s coffers. Others are saying that we should be focusing on the question of whether or not it should exist at all, and not fawning over the fact that it's open source.

My thoughts and feelings on AI at this point in time could probably fill a book, but to keep it short, in my dotage I have become something of a "both sides" guy. People are getting value out of these tools whether I like it or not, and as far as I can tell they're not going anywhere. I am willing to buy that some applications will be, or maybe already are, making the world better. And small, local models that cost less to train do a lot to address the environmental concerns people have, if not the ethical ones.

From a more cynical angle, if AI is going to be forced down our throats anyway, I'd rather it be open-source to give us the opportunity to understand what it's doing and how to counteract it. Saying we shouldn't give it any of our attention seems like wishful thinking at this point. And, lord help me, I have never stopped finding this technology deeply interesting, even at the times when I've been most blackpilled on it.

All that said, I think the incentive structures around AI - to make a spammier internet, to make people more reliant on an algorithm that by its nature is always lying, and to enrich some of the most awful people on the planet - shouldn't be handwaved away just because people are getting excited about tools or open source or whatever. So nobody's necessarily wrong here, in my book. But buying into breathless hype, and forgetting that the profit motive tends to lead to all technological innovation being applied in the worst way imaginable, is best avoided.