Interactive Tech Observations and Digital/Analog I/O Lab

For my interactive tech observations, I chose the ATM across the street from the store where I work. I figured the store would be a perfect spot to observe from without being noticed. My general assumption was that people would use the ATM the way I use it: grudgingly, to get cash when they need it in a hurry. This assumption seems to be mostly correct, at least in this context.

Unfortunately, what I didn’t count on was how rarely the ATM gets used. Even after watching for several days, I saw only two people use it, and neither of them did anything particularly unusual. I will say it seemed like a painstaking process, and a painful one: it takes a while, especially if you’re doing more than one thing, and of course seeing your bank account balance is rarely a pleasant experience. Across those two transactions, the average time was a minute and a half.

In light of the readings, I’m curious about how this generally negative experience could be made more pleasant. Increasing the ease of use would be good: finding a way to not force users to enter a PIN, or decreasing the number of screens one has to click through. I’m also curious whether the experience of taking out money itself can be made more pleasant, maybe by offering the user words of wisdom or showing them ATM usage statistics. Using voice commands, or a less hostile screen, might help as well. Of course, the biggest issue with these ATMs is that they cost money to use.

The Lab

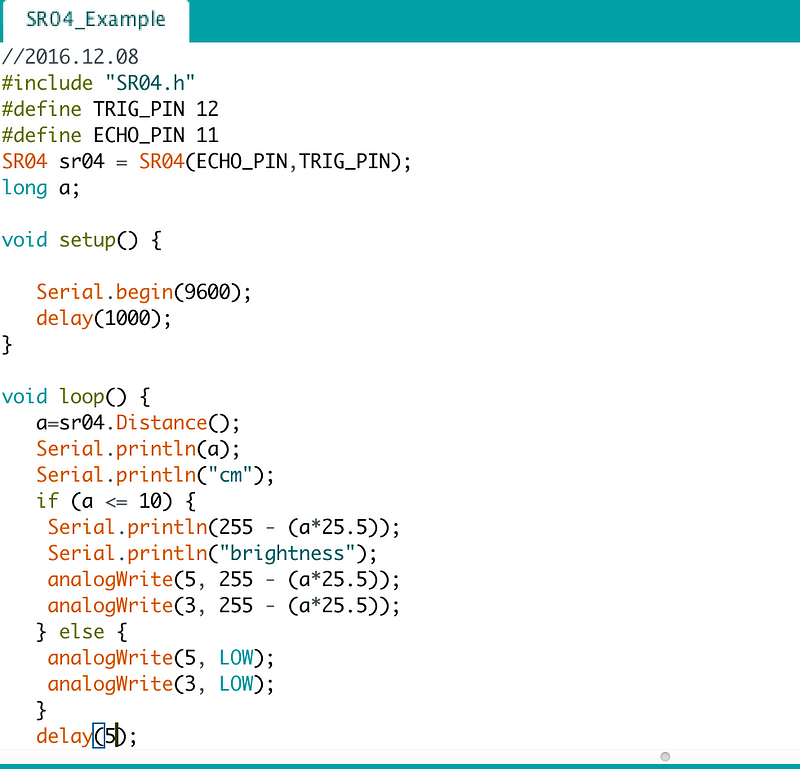

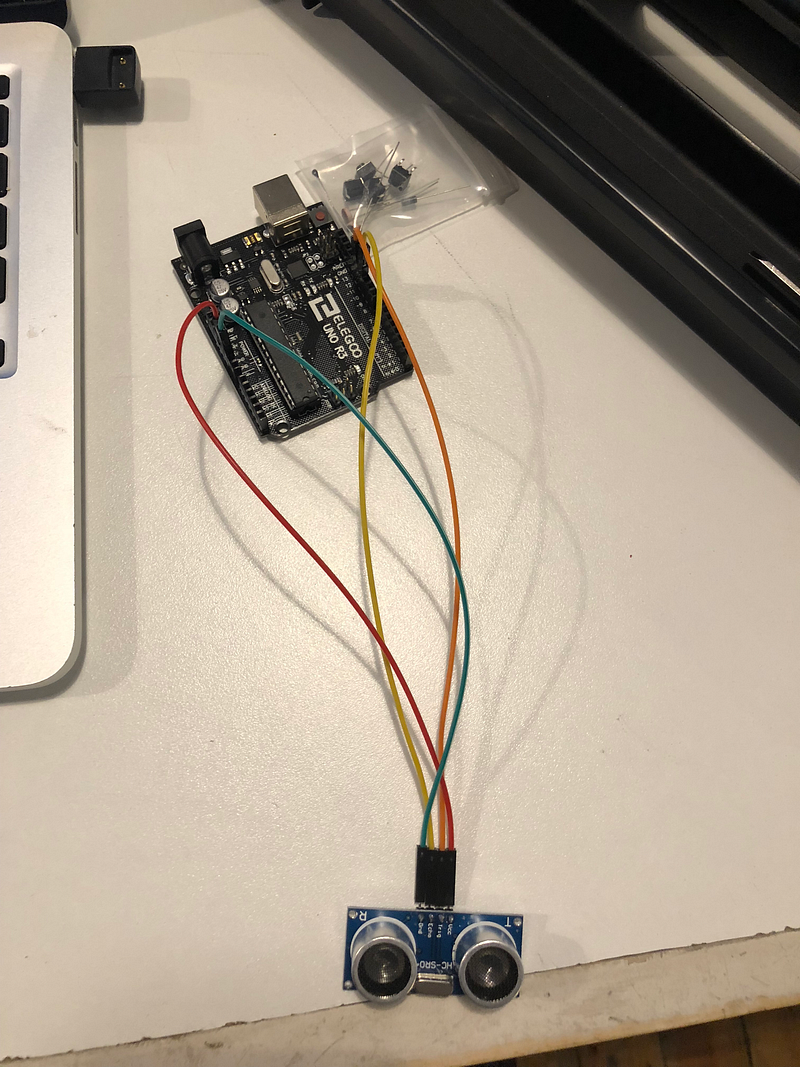

For this week’s lab, I decided to do the very beginning of a longer-term project I’m interested in. I’d like to create a fully interactive sculpture, possibly using Alexa or a similar voice assistant. However, to get there I have to start small. One thing I’m interested in doing is making something on the spookier side, especially given that our midterm project is Halloween-themed. My plan is to build a haunted bird skeleton: use servos to move its wings and head and pull it up and down, and use motion tracking for head motion. The first thing I’d like to do, which I felt matched this week’s lab well, is make the LEDs in its eyes grow brighter as someone approaches. I used the motion sensor that came with my Arduino to do this: basically, I increased the brightness value sent to the LED (which has a maximum of 255) by a tenth of that maximum for every 10 centimeters closer my hand got to the sensor.
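To make the brightness rule above concrete, here is a minimal sketch of the mapping as a plain C++ function that could be dropped into an Arduino sketch. It is not my actual lab code: the function name `brightnessForDistance` and the 100 cm starting range are assumptions made for illustration, and the distance is assumed to arrive already converted to centimeters.

```cpp
// Hypothetical helper: maps a distance reading (in cm) to an LED brightness.
// Assumption: the LED starts reacting at 100 cm and gains one tenth of the
// maximum brightness (255) for every full 10 cm the hand moves closer.
int brightnessForDistance(int distanceCm) {
  const int kMaxRangeCm = 100;     // assumed distance at which the LED is still off
  const int kStepCm = 10;          // one brightness step per 10 cm
  const int kMaxBrightness = 255;  // maximum brightness value for the LED

  if (distanceCm >= kMaxRangeCm) return 0;      // too far away: LED off
  if (distanceCm <= 0) return kMaxBrightness;   // touching the sensor: full brightness

  // Number of full 10 cm steps the hand has moved in from the maximum range.
  int steps = (kMaxRangeCm - distanceCm) / kStepCm;

  // Each step adds a tenth of the maximum brightness (integer division).
  return steps * kMaxBrightness / 10;
}
```

In a sketch, the returned value would typically be passed to `analogWrite` on a PWM-capable pin inside `loop()`. Because the brightness changes in ten coarse steps, the fade looks stepped rather than smooth.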

I wired my Arduino to the motion sensor, then used the sensor’s reading to vary the brightness of the LEDs.

I managed to get the circuit working pretty quickly, but I realized that my sensor was far too weak to really accomplish what I wanted (actually increasing the light slowly as someone approaches). In the morning, I plan to add a more fun element to this by sticking the LEDs in something cool to react to people, but I thought I’d go ahead and post the basic prototype. I’m realizing that I’ll definitely need to invest in a better sensor before I go much further with this project.

Morning Update:

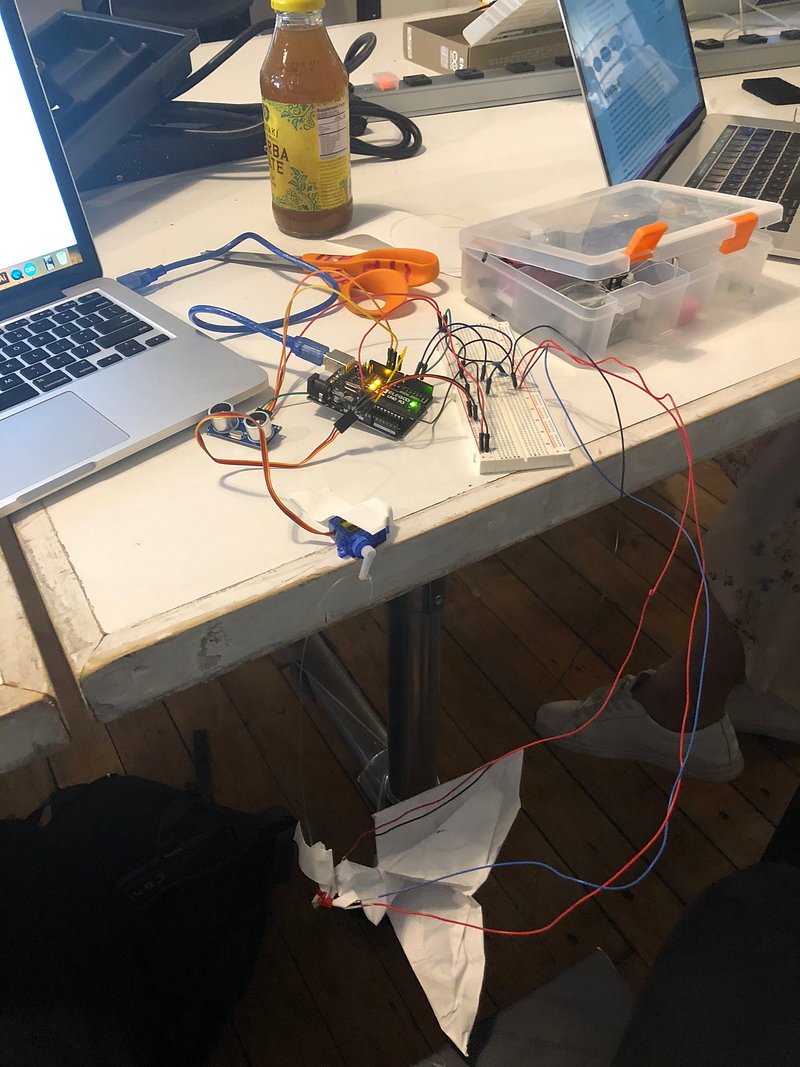

I managed to meet two goals today: I added the LEDs I’d been using to a physical object (an extremely poorly made origami crane), and attached it to a servo so it also reacts physically to the observer. Making the origami crane was the hardest part; I gave up after a while and accepted using a monstrosity instead. Once I set up the servo, making it reactive was simple enough: I had it turn a tenth of its range for each centimeter closer the viewer got to the sensor. You can see the extremely upsetting finished product here: