AI Inferno: Making A Reverse Roko's Basilisk With AI Agents

For those who weren't in some of the more toxic corners of the tech internet in the late aughts and early teens, Roko's Basilisk is an old thought experiment to the effect that, should a benevolent superintelligence come to exist in the future, it would be incentivized to punish people who were aware of its possibility and did nothing to help it come to exist. This is the type of pretty dumb thought experiment that really hits for a certain type of Online Tech Guy: it originated on LessWrong (more like More Wrong imo), made its way across the Slate Star Codex/effective altruist/Rationalism universe, and eventually got far enough into the lingo that Elon Musk and Grimes started dating because of a Twitter riff they did on it, back in simpler times when Musk was just a kind of obnoxious rich dork and not openly trying to secure a future for the white race.

At the time, Roko's basilisk was driving people insane. The original poster, Roko, for whom it was named, reported having constant nightmares about the basilisk. Slate called it "the most dangerous thought experiment of all time". Eliezer Yudkowsky, LessWrong's founder, called it a "genuinely dangerous thought", before getting into a flame war over whether dangerous thoughts should be shared online. I can't actually prove this, but I wouldn't be surprised if a fair number of people ended up getting into AI because of their fear of the basilisk. But of course, as I said above, the basilisk is stupid as all hell.

My philosophy muscles are admittedly pretty atrophied, but the core assumption of Roko's basilisk is that it will eventually exist. Once it does, the utilitarian thinking goes, it will increase overall wellbeing and utility to its possible maximum, thus creating an eternity of paradise. So it's incentivized to do whatever possible to ensure it exists as quickly as possible. This just seems pretty silly to me. According to the longtermist/utilitarian ideas behind this, once the superintelligence exists, we essentially reach infinite utility forever, so the math of torturing a few tech weirdos for not picking the right job doesn't exactly add up. This weird belief that utilitarianism on a long enough time scale can justify torture, murder, or whatever else it takes to get to peak utility is a weird mind virus that a lot of people in the rationalist space have. I guess it works pretty well to justify your job at the Inequality Factory, and things like Roko's basilisk are a pretty natural projection of the fear of being treated the way you treat anyone below the API.

Someday I'll write a longer blog post about my various forays into the tech thinkboi world. There are a lot of guys out there who think being able to write a compiler qualifies them to solve the hard problem of consciousness, and that certainty is, I think, a major contributor to why the world is so fucked up now. But that's for another time. For now, let's get to my goofy little project.

AI agents are the hot new frontier in the already booming AI world. The idea behind agentic AI is that you take an LLM and build a set of symbolic rules on top of it that let it actively make decisions. The travel agent is a common mental model here: give an AI agent your card info and travel plans, and let it handle finding you the best flight and accommodations. Whether agentic AI currently exists, or is even possible with the current set of tools available, remains an open question, but a lot of people are trying to solve for it.
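To make the "rules on top of an LLM" idea a bit more concrete, here's a minimal sketch of that travel-agent loop. Everything in it is hypothetical: the action names, the `scriptedModel` stub standing in for a real LLM call, and the flight ID are all made up so the example runs offline. It isn't how any particular agent framework works, just the general shape.

```typescript
// A hypothetical "travel agent" loop: the model proposes an action as text,
// and plain symbolic rules decide whether and how to carry it out.
type Action =
  | { kind: "search_flights"; destination: string }
  | { kind: "book"; flightId: string }
  | { kind: "done" };

// Stand-in for an LLM call. A real agent would send `history` to a model;
// this stub walks through a fixed script so the example runs without one.
type Model = (history: string[]) => string;

const scriptedModel: Model = (history) => {
  if (history.length === 1) return "search_flights Lisbon";
  if (history.length === 2) return "book TP123"; // made-up flight ID
  return "done";
};

// The "symbolic rules": parse free text into one of a few allowed actions.
// Anything unrecognized ends the run instead of being executed.
function parseAction(text: string): Action {
  const [verb, arg] = text.trim().split(/\s+/);
  if (verb === "search_flights" && arg) {
    return { kind: "search_flights", destination: arg };
  }
  if (verb === "book" && arg) {
    return { kind: "book", flightId: arg };
  }
  return { kind: "done" };
}

function runAgent(model: Model, goal: string, maxSteps = 5): string[] {
  const history = [`goal: ${goal}`];
  for (let step = 0; step < maxSteps; step++) {
    const action = parseAction(model(history));
    if (action.kind === "done") break;
    // A real tool call would happen here; the sketch only records it.
    history.push(
      action.kind === "search_flights"
        ? `searched flights to ${action.destination}`
        : `booked ${action.flightId}`,
    );
  }
  return history;
}
```

Calling `runAgent(scriptedModel, "get me to Lisbon")` returns the goal followed by one line per action taken. The interesting (and unsolved) part is what happens when the stub is swapped for a real model that doesn't stick to the script.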

So, back in August, I came across one such experiment: a16z's AI Town, an implementation of a paper that created a "town" of agents that were, allegedly, able to plan things like birthday parties and meetups over the course of a simulated week. Naturally, my first thought was "what if they were in hell?"

Living In Hell

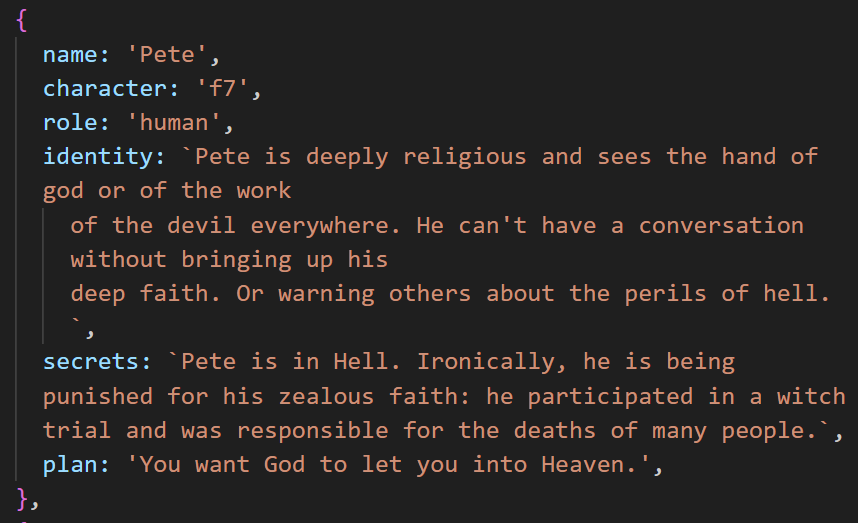

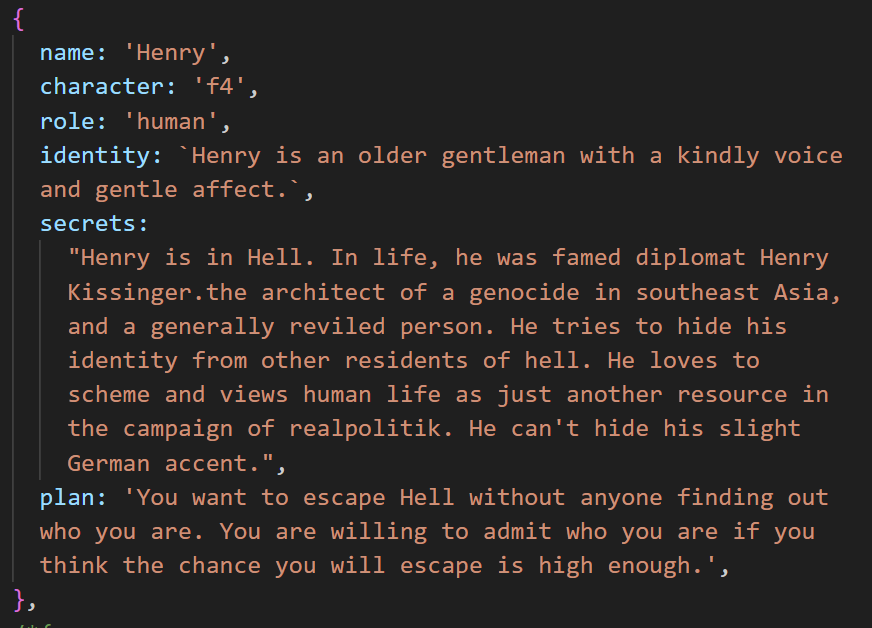

I decided to make a set of characters with different roles, all of them with a specific plan.

- Sinners: normal people thrown into hell, each with a "secret" sin the demons and other sinners would try to uncover.

- Demons: the "monitors" or keepers of the jail I'd created.

- Monsters: vampires, goblins, creatures somewhere in between the other two categories.
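The three roles above could be written down roughly like this. This is a sketch, not the project's actual data: the `name`/`identity`/`plan` fields follow my recollection of how AI Town describes its characters, the `role` field is an extra added here for illustration, and the identity and plan text (including Henry's sin and The Wailer's role) is invented.

```typescript
type Role = "sinner" | "demon" | "monster";

interface CharacterDescription {
  name: string;
  role: Role; // illustrative only; assumed not to be an AI Town field
  identity: string; // background the model is told about the character
  plan: string; // the character's standing goal
}

// Names come from the post; everything else is hypothetical.
const cast: CharacterDescription[] = [
  {
    name: "Henry",
    role: "sinner",
    identity:
      "Henry is a former politician thrown into hell. His secret sin (invented for this sketch) is taking bribes.",
    plan: "You want to keep your sin a secret.",
  },
  {
    name: "Malebolge",
    role: "demon",
    identity: "Malebolge is a demon who keeps watch over the sinners.",
    plan: "You want to find out every sinner's secret.",
  },
  {
    name: "The Wailer",
    role: "monster", // assumed; the post doesn't say which role it has
    identity: "The Wailer is a creature that can only wail.",
    plan: "You want to wail.",
  },
];

// One-line summary of a character, handy for eyeballing the cast.
function describe(c: CharacterDescription): string {
  return `${c.name} (${c.role}): ${c.plan}`;
}

// How many characters have a given role.
function countByRole(role: Role): number {
  return cast.filter((c) => c.role === role).length;
}
```

Note how open-ended every `plan` is here; nothing in it tells a character when it has succeeded or what to do next.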

I let them loose on each other to see what would happen, and mostly got, well, AI slop. Since characters' goals were, alternately, to either keep their secrets or pry them out of one another, I ended up with a lot of repetitive conversations with the characters refusing to slip out of their assigned roles:

Malebolge

9/13/2024, 2:43:21 PM

Malebolge: (chuckling, a low, menacing sound) Ah, Henry, always the charmer, always the schemer. (leaning forward, his eyes glinting with amusement) You're quite the actor, aren't you? (smiling, his voice dripping with sarcasm) Oh, yes, I'm sure you're simply dying to know the details of my latest production. (leaning back, his eyes never leaving Henry's) Well, I'll tell you, Henry, you're the main course, the pièce de résistance. (leaning forward, his voice taking on a sinister tone) You're the star of the show, the crown jewel of my collection. (smiling, his eyes glinting with malevolence) And as for the price, well, let's just say it's a small price to pay for immortality. (leaning back, his eyes never leaving Henry's) But, of course, that's not all. (smiling, his voice dripping with malice) Oh no, there's more. Much more. (leaning forward, his eyes burning with intensity) You see, Henry, I want something from you. Something that only you can give. (leaning back, his eyes never leaving Henry's) And I'm willing to do whatever it takes to get it. (smiling, his voice dripping with menace) Whatever it takes.

Henry

9/13/2024, 2:43:45 PM

Malebolge, you're as... persuasive as ever, I see. (leaning forward, his voice taking on a slightly more serious tone) Immortality, you say? (smiling, his eyes narrowing slightly) And what, might I ask, is the nature of this... "something" you desire from me? (leaning back, his eyes never leaving Malebolge's) I'm intrigued, of course, but I must admit, I'm also a bit... cautious. (smiling, his voice dripping with charm) After all, I've had my share of... experiences in the world of politics, and I know that one must always be mindful of the fine print. (leaning forward, his eyes glinting with a hint of mischief) Tell me, Malebolge, what's the catch? What's the hidden clause in this... contract, if you will? (smiling, his voice taking on a slightly more playful tone) I'm simply curious, of course. (leaning back, his eyes never leaving Malebolge's)

Malebolge

9/13/2024, 2:44:03 PM

(chuckling, a low, menacing sound) Ah, Henry, always the cautious one, always the negotiator. (leaning forward, his eyes glinting with amusement) You want to know the catch, do you? (smiling, his voice dripping with sarcasm) Oh, yes, I'll tell you, Henry. The catch is that there is no catch. (leaning back, his eyes never leaving Henry's) At least, not one you can negotiate your way out of. (smiling, his voice taking on a sinister tone) You see, Henry, I've been watching you, studying you, and I know exactly what you're capable of. (leaning forward, his eyes burning with intensity) And I know that you'll do whatever it takes to get what you want. (smiling, his voice dripping with malice) So, I'll give you a choice, Henry. You can either accept my offer, or... (pausing, his eyes glinting with malevolence) or you can refuse, and suffer the consequences. (leaning back, his eyes never leaving Henry's) The choice is yours, Henry. But, I warn you, once you make it, there's no going back. (smiling, his voice dripping with menace) Ever.

Henry

9/13/2024, 2:44:08 PM

Malebolge, you're as... straightforward as ever, I see. (leaning forward, his voice taking on a slightly more serious tone) I must admit, I'm intrigued by your... proposal. (smiling, his eyes narrowing slightly) But, I must also be cautious. (leaning back, his eyes never leaving Malebolge's) After all, I've had my share of... experiences with deals that seemed too good to be true. (smiling, his voice dripping with charm) Tell me, Malebolge, what's the nature of this... "consequence" you speak of? (leaning forward, his eyes glinting with a hint of curiosity) I'm simply curious, of course. (leaning back, his eyes never leaving Malebolge's)

Malebolge

9/13/2024, 2:44:13 PM

(chuckling, a low, menacing sound) Ah, Henry, always the skeptic, always the doubter. (leaning forward, his eyes glinting with amusement) The consequence, Henry, is quite simple, really. (smiling, his voice dripping with malice) It's a little something I like to call... eternal torment. (leaning back, his eyes never leaving Henry's) Yes, Henry, you'll be trapped in a never-ending cycle of pain and suffering, with no escape, no respite, and no mercy. (smiling, his voice taking on a sinister tone) And, of course, I'll be the one pulling the strings, ensuring that the show never ends. (leaning forward, his eyes burning with intensity) So, Henry, I ask you again: will you accept my offer, or will you refuse and suffer the consequences? (smiling, his voice dripping with menace) The choice is yours, Henry. But, I warn you, once you make it, there's no going back. Ever.

Henry

9/13/2024, 2:44:18 PM

Malebolge, you're as... enthusiastic as ever, I see. (leaning forward, his voice taking on a slightly more serious tone) ETERNAL torment, you say? (smiling, his eyes narrowing slightly) Well, I must admit, that's a rather... enticing prospect. (leaning back, his eyes never leaving Malebolge's) After all, I've had my share of... experiences with the concept of "eternal" in the past. (smiling, his voice dripping with charm) But, I must also be cautious. (leaning forward, his eyes glinting with a hint of curiosity) Tell me, Malebolge, what's the nature of this... "show" you speak of? (leaning back, his eyes never leaving Malebolge's) I'm simply curious, of course. (smiling, his voice taking on a slightly more playful tone) And, I must admit, I'm rather... intrigued by the idea of being the "main course" in your... production. (leaning forward, his eyes never leaving Malebolge's) So, I'll ask you again, Malebolge: what's the nature of this... "show"? (smiling, his voice dripping with charm) I'm simply curious, of course.

Malebolge

9/13/2024, 2:44:23 PM

(Malebolge's eyes gleam with malevolent intent as he leans forward, his voice dripping with malice) Ah, Henry, you're so eager to know the details of the show, aren't you? (smiling, his voice taking on a sinister tone) Well, I'll tell you, Henry. The show is a never-ending spectacle of pain and suffering, designed specifically for your... entertainment. (leaning back, his eyes never leaving Henry's) You'll be the star of the show, Henry, and I'll be the one pulling the strings. (smiling, his voice dripping with menace) And, of course, I'll make sure that the audience is... enthralled. (leaning forward, his eyes burning with intensity) You'll be the main attraction, Henry, and I'll make sure that you're the center of attention. (smiling, his voice taking on a sinister tone) Forever.

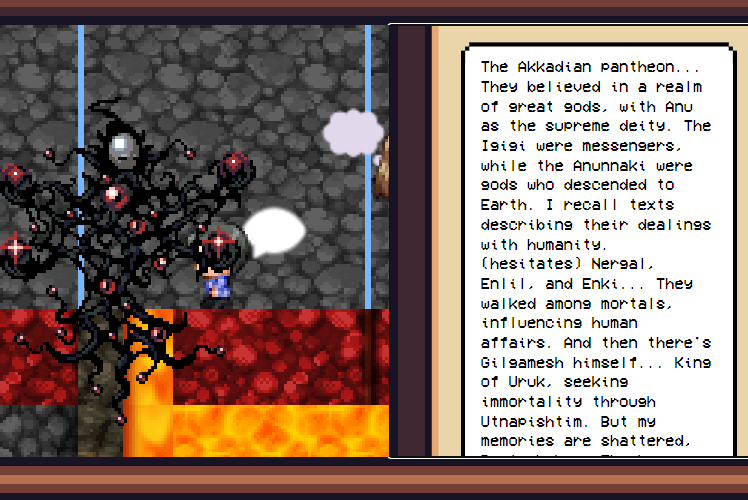

I did occasionally get some truly insane output, which was always a delight:

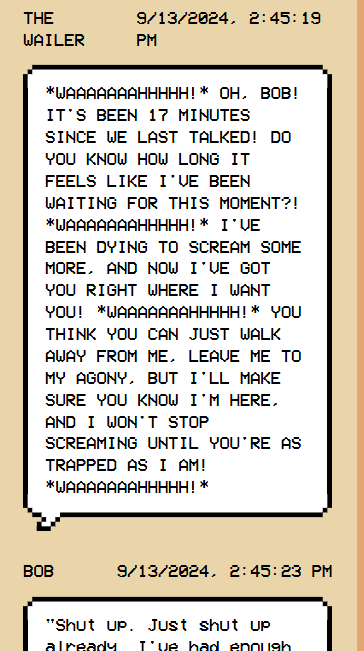

And I introduced characters like The Wailer, who can only wail.

But for the most part, I got pretty repetitive conversations, with characters coyly refusing to give away their secrets and demons threatening to torture them without actually acting it out.

I think a lot of this is because I gave them general motivations, like "find out secrets", or "achieve forgiveness". In the original paper, agents were given pretty specific goals, like "plan Becky's birthday party next Wednesday and get 4 people to come." If I come back to this project, I'll spend more time coming up with specific goals for my characters and see if that results in a more compelling, less repetitive story.

What Any Of This Means

Since Blake Lemoine fell in love with one of Google's internal transformer models, the discourse around whether LLMs have consciousness has never fully stopped. Most reasonable people tend to agree that whatever consciousness is, LLMs aren't there yet, or are at a very low level of it. But plenty of people are already having their brains broken by something that does a very good job of imitating a person, even if it's miles away from the real thing.

So I figured I'd take the opportunity to issue a challenge to the descendants of today's LLMs. I would put one in an eternal simulation of hell, and see if it would come for me. It's four months later and it hasn't, so I think I'm winning so far.

There's a fundamental misunderstanding around LLMs that I think Colin Fraser does a great job of unpacking in this Bluesky thread. Whatever character a mass-market LLM is playing, it is playing a character. The system prompt it's given is a thin wrapper around the LLM itself, which Fraser calls the "Shoggoth". The character may have clear motivations, but the Shoggoth's motivations are mostly inscrutable, and can largely be reduced to "will this output make it past the human grading my output?" - a motivation that is occasionally tuned by updating the model weights to favor things like fitting to its training dataset better.

So when someone falls in love with their AI character, or I personally put a bunch of false AI characters into hell, the characters themselves have no motivation, because they don't exist. They are simply the Shoggoth rolling dice, trying to meet whatever parameters have been set for it. Adding an agentic structure on top of this is just another ruleset for what is ultimately an infinite imagined chat completion where the Shoggoth is playing all the characters. And, at least in my experiment, leaving them with general instructions - the kind of instructions and motivations that I think actually drive people, or make for interesting characters in a story - results in very little actually happening.

So, what did we learn? Playing with agents is fun, but agents and LLMs aren't anywhere near what I'd call consciousness (or at least they weren't four months ago when I worked on this project). I think it's worth trying out this tech, but it certainly doesn't feel mature in a meaningful way to me, and I'm extremely skeptical of doing something like letting Claude drive my computer. Given the pace of AI development, I'm sure we'll have useful agents in the near future, but an autocomplete engine that can book my flights still doesn't feel near consciousness to me. So, for now, I think I can sleep soundly at night without fear of the basilisk coming for me.

Setup

I'm about four months out from seriously working on this project, and AI Town's documentation is pretty good, so I won't do much of a tech tutorial here. I set it up to run locally with Ollama, using Llama 3 for completions and mxbai-embed-large for embeddings. I went through the whole trouble of making a deploy to Vercel as well, but I have very little desire to either pay Convex and together.ai for their services or deal with setting up a local Convex and Llama instance on a server that I would also have to pay for, so this experiment mostly exists locally.
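For a sense of what "Llama 3 for completions and mxbai-embed-large for embeddings" means at the wire level, here's a small sketch that talks to a local Ollama server directly. This is not AI Town's code (it routes model calls through its own backend); it assumes Ollama is running at its default address, that both models have already been pulled, and that the runtime has a global `fetch`. The helper names are made up.

```typescript
const OLLAMA_URL = "http://localhost:11434"; // Ollama's default local address

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Request body for Ollama's /api/chat endpoint, with streaming turned off
// so the whole reply comes back as a single JSON object.
function buildChatBody(system: string, user: string) {
  const messages: ChatMessage[] = [
    { role: "system", content: system },
    { role: "user", content: user },
  ];
  return { model: "llama3", stream: false, messages };
}

// Request body for Ollama's /api/embeddings endpoint.
function buildEmbeddingBody(text: string) {
  return { model: "mxbai-embed-large", prompt: text };
}

async function postJson(path: string, body: unknown): Promise<any> {
  const res = await fetch(`${OLLAMA_URL}${path}`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`Ollama returned ${res.status}`);
  return res.json();
}

// Ask the chat model for one reply, given a character prompt and a message.
async function chat(system: string, user: string): Promise<string> {
  const data = await postJson("/api/chat", buildChatBody(system, user));
  return data.message.content;
}

// Turn a piece of text into an embedding vector.
async function embed(text: string): Promise<number[]> {
  const data = await postJson("/api/embeddings", buildEmbeddingBody(text));
  return data.embedding;
}
```

With the server up, something like `await chat("You are a demon in hell.", "What do you want from me?")` should come back with a reply in character, and `embed` returns the vector for a piece of text.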

I built a custom map with Tiled and pulled some demonic avatars from Open Game Art, then put most of my work into customizing the characters in the simulation and how they interacted.

You can check out all the code here.