Just Because It's Useful Doesn't Mean It's Good

Independent of yesterday's dive into a "useful" application of AI, discourse erupted on Bluesky over Hank Green saying he didn't think the "useless" critique holds water anymore. For the most part people are very angry at the perception of ceding any rhetorical ground to Big AI, which is a fair position to take. Green also put it in a fairly condescending "just asking questions" kind of way, which doesn't necessarily help his case. I don't love the phrasing but there is something to his point: people are finding utility in these tools whether we like it or not, and I think making the effort to understand why people use something you're critical of is more worthwhile than shouting it down on principle.

The problem, though, is that Green doesn't explain what "useful" actually means in this context. The lie we are being sold is that AI is revolutionary and is going to take over everything, while once the rubber hits the road its impact usually falls somewhere between "annoying" (in the case of, say, AI search results) and "actively catastrophic" (using ChatGPT to decide tariff rates, or mental health chatbots that end up telling people to kill themselves). Whatever usefulness it has is in comparatively narrow and specific applications, and it's disingenuous to call the consumer applications of AI "useful" when they are, so far as I can tell, entirely harmful.

I think the biggest problem with how we talk about AI is that, in almost every public discussion of it, a whole lot of disparate technologies and applications are condensed into a single monolithic concept. I actually used to make a point of refusing to use the term for this reason, but decided to stop dying on that hill after this field went from a niche interest to something my uncle wants me to explain at Thanksgiving dinner. Being critical of "GenAI" gets a bit closer to the issue, but it's still extremely reductive. I'm not going to use today's post to dive into how LLMs and diffusion models are wildly different things, but suffice it to say it's a problem.

The thing I'm interested in today is "usefulness", and I think the issue in how we talk about AI in those terms is the extremely wide gap between the specific cases where it is actually useful and its marketing framing as a technology that can and should be applied to almost any consumer use case.

From the side of people in fields where AI is actually useful, I think it's easy to fall into the assumption that everyone uses tools the way you do. I was guilty of this yesterday! Deep Research feels revolutionary to me because I spend so much of my time using search engines to research and solve specific code problems. As was pointed out to me by multiple people, most search traffic is just people looking for a specific web page, answering a simple question, or being too lazy to type out an entire URL. A multi-step agentic framework is overkill for that.

The same applies to the cases where I genuinely do think it's useful in programming: repetitive tasks and boilerplate generation are a huge part of a programmer's job that you really shouldn't have to do more than once by hand, and I see no particular issue with speeding that up through automation of any kind. Generally, as I've said before, I think it's a great tool to speed things up once you understand something well enough to know what it does. I see no issue with using a calculator once you've learned your times tables. But this isn't how AI is being sold to the mass market. The question becomes a lot more fraught when you're using an LLM to correspond with actual human beings and not with a computer, or to solve problems that require thought and care. An LLM messing up the structured data in an HTML template while I'm working on it in a dev environment is significantly less of a problem than a ChatGPT-written brief with incorrect information being filed in court.

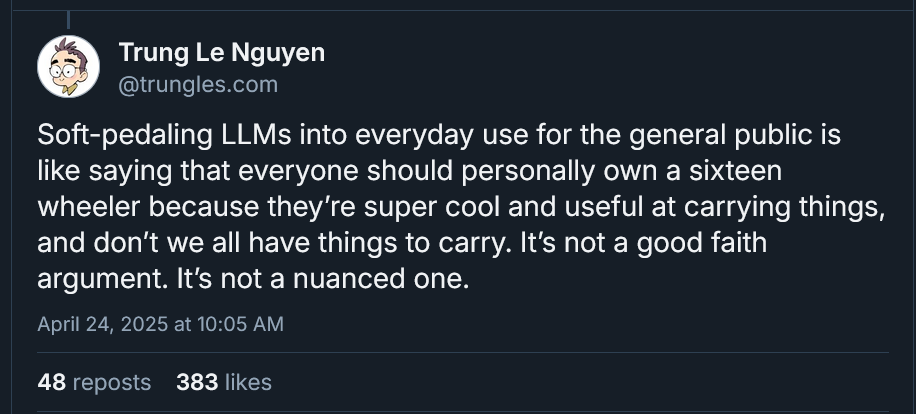

There's a certain naivete to the way "experts" think about AI. The sixteen-wheeler analogy is a good one. Just because you are a senior engineer and can use the tool within a context where its use is safe and helpful doesn't mean a junior engineer will have the same self-control. People use tools differently, and if they don't understand well enough how a tool is best used, they'll take the path of least resistance: until you run up against a vibe-coded bug that wipes your production database, chances are you'll just keep vibe coding. But again, I think most people tend to assume the way they use things is the right or normal way. If you're only thinking about your narrow use case and not about how high schoolers are using LLMs to speedrun illiteracy, you might be significantly less worried about their impact.

From the point of view of a normal person, on the other hand, it's reasonable to assume these tools are entirely useless when the vast majority of the use cases you're exposed to are the bad ones. I guess there's a deeply cynical argument to be made that having an LLM write your emails is useful, and I don't necessarily begrudge people using them to write cover letters or whatever, but the vast majority of common tasks that LLMs are any good for are ones that you shouldn't have to do in the first place. Using an LLM to actually replace human connection, communication, and creativity is the act of a stunted soul. I suppose plenty of people are perfectly willing to become incurious and incapable of independent thought or creation, but those people are wrong. Useful is not synonymous with good, but you have to draw the line somewhere. Nobody says that getting blackout drunk is a useful way to ensure you crash your car.

The loudest pro-AI voices tend to fall into the deeply anti-human camp, but I do try to at least listen to the "experts" that I think are coming from a genuine place. Still, I don't think it's reasonable to expect a layman to understand or even care why these things might be useful to an engineer or a scientist. And as long as AI is miscast as a technology that everyone should be using this won't matter much - a thing is what it does, and I'm more inclined to side with the "AI is evil and should never be used" camp until it stops making everything worse. The marginal efficiency improvements I might get out of specific applications of it aren't a hill I'm willing to die on. At this point I feel the same way about AI that I do about cars: sure, it can be useful in the right hands, but it'd probably be better for the world if it was banned entirely. I think it's valuable, especially as a technologist, to keep making an effort to understand these tools and how they're used. If you want to critique something, it's good to actually understand how it works. But we should never lose sight of the fact that we're playing with a loaded gun.

The best-case scenario for me is that Ed Zitron is right and the AI bubble explodes. Having been alive long enough to see plenty of tech companies that will likely never turn a profit become load-bearing pillars of the economy, I'm not convinced that it'll happen, but I hope I'm wrong. If we can come back to earth a bit, and this tech is no longer associated with its worst possible applications or being forced down everyone's throat, we might be able to have a nuanced conversation about when and where it's actually useful. But until that happens I think it's probably for the best that its critics are as loud as possible.