> It is rich cynics trying to make something lifeless grow in the way that living things do, and lock the dying present they rule in for the foreseeable future by effectively removing everyone from it but them. They are impatient not just because they are high-handed and avaricious, but because they know that the only future they can rule in the way they want is one that is passive, stupid, small and shrinking.

David Roth, excellent as always, on CES in Defector. I do think it's easy to get myopic about AI when you exist mostly in the spaces where it does have genuine utility (programming, games, and digital art), and where it is therefore something to fear. This stuff is still mostly useless in practice! Roth also raises a point I think isn't made enough (because I have made it a bunch and no one listens to me). Most run-of-the-mill uses of AI as it currently exists are for the various and sundry tedium of life under late capitalism, things we shouldn't have to do in the first place: filling out forms, writing cover letters, desperately trying to get someone to fix your medical bills.

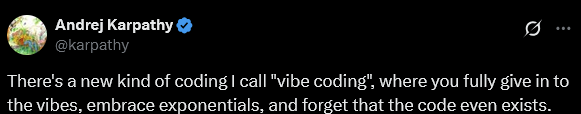

A point Roth doesn't make, but one I think ties into the quote above, is that by putting their own offal into the well of data they're drawing from, AI companies are effectively freezing usable data at around 2023. So much of the internet is already machine-generated slop, and it's so difficult to determine which data is usable, that bot-free datasets can't stay up to date. The rich are freezing AI's knowledge of the world at the dying present, functionally preventing it from growing by the same means through which it was created. I gave a somewhat tongue-in-cheek talk about this last year, and while I'm not sure how well some of the points I made there will hold up, I do think this is something to watch. Synthetic data and curated datasets may be a way out of this hole, and maybe the slop will get good enough that models can train on their own output (model distillation, training one model on another's output, is a big thing right now), but I can't help but question how far that can take us. How useful is an LLM that can't grow at the pace human culture does? What will come of models endlessly consuming their own output? Or will the proliferation of LLMs prevent us from growing at all?