The Death of Learning

I've been thinking more about vibe coding lately and getting pissed about the whole concept. I think there's something deeply wrong with the mindset behind "prompt, don't read the code, don't think about it." I have written in the past about how artificial intelligence is a tool that accelerates the worst impulses of capitalism, and vibe coding is the latest iteration of what is possibly the impulse I hate the most: sacrificing understanding for efficiency.

Here's the thing. I like learning. I know it's very east coast liberal arts elitist of me but I think there is inherent value in putting in the work to comprehend something new. So I would generally rather produce something slowly and come to understand the tools I am using than prompt a black box to make it for me.

I was talking to a friend recently about when, if ever, LLMs are a net positive for engineers. The people who have found uses for them in their workflows that I respect tend to already be experts in their field. This is the biggest issue with their widespread adoption. Junior engineers come to rely on LLMs, never actually learn anything, and never progress as programmers. As a mid-level engineer, I'm usually working on problems that either LLMs aren't very good at, or that are new enough to me that I want to learn them. The only situation where LLMs are useful without costing you the process of learning and understanding something is one where you don't need to learn anything new. So the people who are likely to get the most value out of LLMs are the people who have already struggled through learning the things that LLMs do easily.

What does this look like in practice? I think LLMs are a halfway-decent solution for generating boilerplate or, I dunno, writing HTML. But any time you're entrusting application logic to them, unless you have immense domain expertise (and really good self-control), I think you'll eventually back yourself into a vibe-coded corner where you don't understand what's happening. Most of the time I am not convinced that LLMs are faster or better than a well-informed person, and the exceptions to that are usually when they're in the hands of a senior-level or higher engineer.

So you have to learn a lot to use an LLM well. And even then, I think you ought to be pretty sparing in its use - there are plenty of testimonials from people who found that LLM use resulted in rapid deskilling. But I guess all of this depends on my assumption that learning is an inherent good, one that fewer and fewer people seem to share.

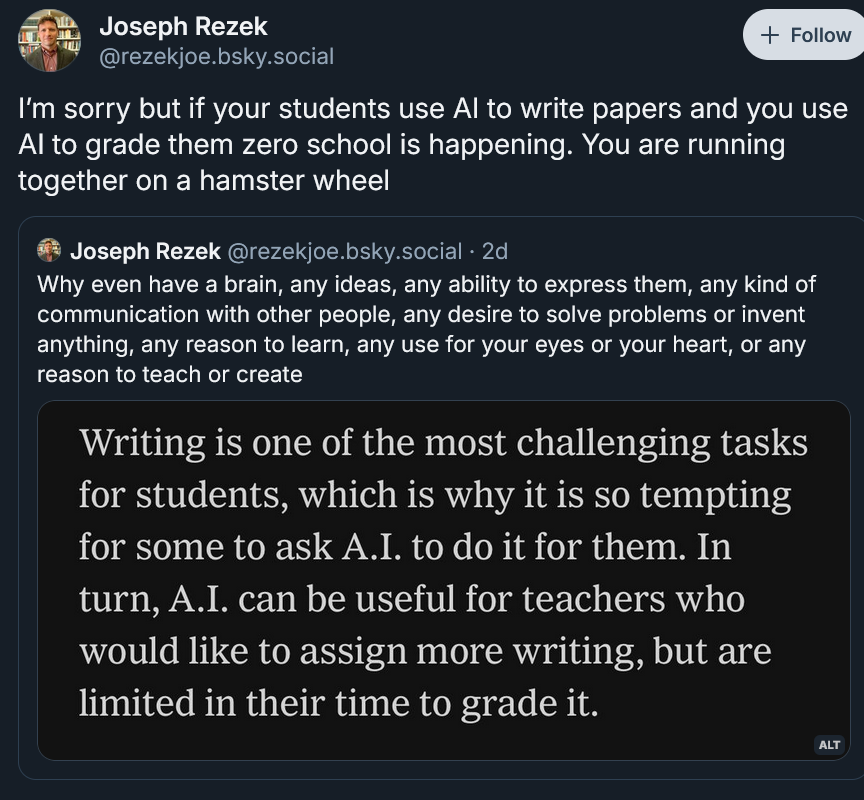

I am coming to accept that being pro-learning is a losing battle. Literacy rates are dropping and people are increasingly willing to outsource the acts of thinking and writing to a subscription service. My preference for text-based media puts me in a significant minority. I've tried to be open-minded about the widespread adoption of LLMs but in practice they seem to be significantly different from any other "second brain" out there: for most people, they don't seem to open any paths to new types of thought, they just help you produce bullshit faster.

Although it might well be the case that most people simply prefer the path of least resistance, I don't want to blame human nature here. I would like to believe that most people want to learn and think and write and know. But if you put those people in a system that incentivizes efficiency over understanding, they'll eventually fall in line. If the assumption is that we have to work the exact same amount but AI will make us more productive, then of course people will give up and start churning out slop.

I guess on the bright side in five or ten years maybe Paul Graham will turn out to be right and people for whom reading, writing, and thinking are deeply important will be in high demand. But that's cold comfort in the face of the despair I am starting to feel. I have long held that 90% of what LLMs are good at is stuff that you shouldn't have to do anyway: meaningless paperwork, tedious tasks, all the endless humiliating hoops of living in a world of bullshit jobs. Unfortunately they are now also good enough, most of the time, at all the stuff that makes human life worth living. I don't think "good enough" is what we should aspire to, but in a world where the only higher good is increased efficiency, who cares if we're sacrificing curiosity as long as we're sending out the emails faster?

There are pro-AI people that I deeply respect and think are using it to great effect. Most of these people fall somewhere between an expert and a literal genius. But there's a naive assumption that a lot of them seem to hold: the belief that everyone can be like them. The belief that AI can, for everyone, be a tool that simply makes an already incredible mind work faster. But for most people, given the incentives we live under, the temptation to outsource their mind entirely is too great to resist. I would like to live in the world where AI allows all of us to work less, to live more, to pursue the life of the mind. But we don't live in that world, and the moral arc of the one we live in bends towards drudgery.

I hope I am somehow wrong about this, but I doubt it. Barring a massive change in the moral and economic bent of our society, the incentives are all lined up for people to continue surrendering themselves to the illiteracy machine apace. The world of slop I've been worried about for years continues to grow. At this point all I can really hope for is that, once everything has drowned in it and there's no one left who knows how to fix things, we can build something better.